At the Supercomputing ’23 conference in Denver on Nov. 13, NVIDIA announced expanded availability of the NVIDIA GH200 Grace Hopper Superchip for high-performance computing, as well as HGX H200 systems and cloud instances for AI training.

NVIDIA HGX H200 platform enhances generative AI and high-performance computing workloads

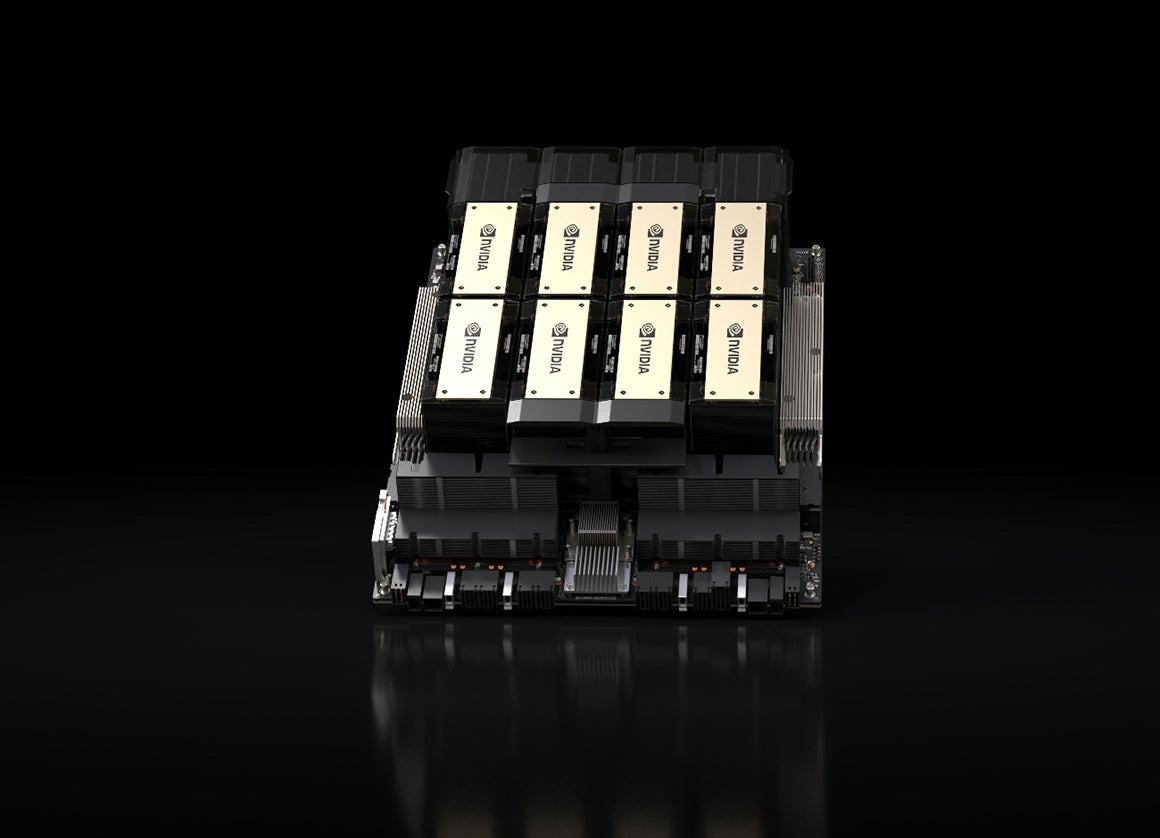

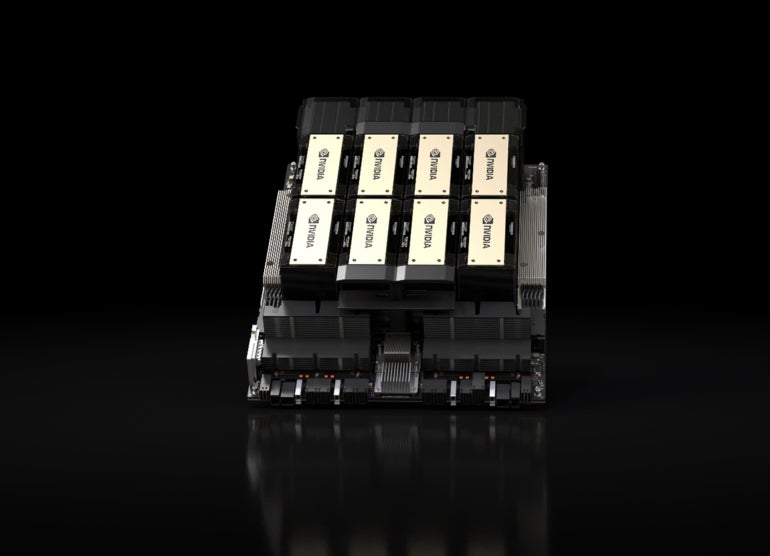

The HGX H200 supercomputing platform, which is built on the NVIDIA H200 Tensor Core GPU, will be available through server manufacturers and hardware providers that have partnered with NVIDIA. The HGX H200 is expected to start shipping from cloud providers and manufacturers in Q2 2024.

Amazon Web Services, Google Cloud, Microsoft Azure, CoreWeave, Lambda, Vultr and Oracle Cloud Infrastructure will offer H200-based instances in 2024.

NVIDIA HGX H200 features the following:

- NVIDIA H200 Tensor Core GPU for generative AI and high-performance computing workloads that require massive amounts of memory (141 GB of memory at 4.8 terabytes per second).

- Nearly double the inference speed of the NVIDIA H100 on Llama 2, a 70-billion-parameter LLM.

- Interoperable with the NVIDIA GH200 Grace Hopper Superchip with HBM3e.

- Deployable in any type of data center, including on servers from existing partners ASRock Rack, ASUS, Dell Technologies, Eviden, GIGABYTE, Hewlett Packard Enterprise, Ingrasys, Lenovo, QCT, Supermicro, Wistron and Wiwynn.

- Inference and training for the largest LLMs, beyond 175 billion parameters.

- In an eight-way configuration, over 32 petaflops of FP8 deep learning compute and 1.1TB of aggregate high-bandwidth memory.
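The board-level totals in the last bullet line up with the per-GPU figures if, as we assume here, they describe an eight-GPU HGX H200 board (the GPU count is our assumption for this sketch). A quick Python check of that arithmetic, using decimal units:

```python
# Back-of-envelope check of the HGX H200 board totals.
# Assumption: the 1.1 TB and 32 petaflops figures describe an eight-GPU board.
GPUS_PER_BOARD = 8        # assumed eight-way configuration
HBM_PER_GPU_GB = 141      # per-GPU memory quoted for the H200
BOARD_FP8_PFLOPS = 32     # "over 32 petaflops" of FP8, treated as a lower bound


def aggregate_hbm_tb(gpus: int, gb_per_gpu: float) -> float:
    """Total HBM across the board in terabytes (1 TB = 1000 GB)."""
    return gpus * gb_per_gpu / 1000


def per_gpu_pflops(board_pflops: float, gpus: int) -> float:
    """Lower bound on FP8 compute per GPU implied by the board total."""
    return board_pflops / gpus


total_tb = aggregate_hbm_tb(GPUS_PER_BOARD, HBM_PER_GPU_GB)
print(f"Aggregate HBM: {total_tb:.3f} TB (~{total_tb:.1f} TB)")
print(f"FP8 per GPU: >= {per_gpu_pflops(BOARD_FP8_PFLOPS, GPUS_PER_BOARD):.1f} PFLOPS")
```

Eight GPUs at 141 GB each give 1,128 GB, which rounds to the quoted 1.1TB; dividing the FP8 total by eight implies more than 4 petaflops per GPU.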

“To create intelligence with generative AI and HPC applications, vast amounts of data must be efficiently processed at high speed using large, fast GPU memory,” said Ian Buck, vice president of hyperscale and HPC at NVIDIA, in a press release.
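Buck's point about large, fast memory can be made concrete with a rough estimate. The sketch below assumes, for illustration only, that Llama 2 70B is served with 16-bit weights and that generating each token requires reading every weight from HBM once; it ignores the KV cache, compute time and batching, so the result is an idealized ceiling for a single request stream on one H200, not a benchmark.

```python
# Idealized, memory-bound estimate of single-stream LLM decoding on one H200.
# Assumptions (not from the article): 16-bit weights (2 bytes per parameter),
# every weight is read once per generated token, and KV-cache traffic,
# compute time and software overhead are ignored.
PARAMS = 70e9             # Llama 2 70B parameter count
BYTES_PER_PARAM = 2       # assumed FP16/BF16 weights
HBM_CAPACITY_GB = 141     # H200 memory capacity quoted in the article
HBM_BANDWIDTH_TBPS = 4.8  # H200 memory bandwidth quoted in the article


def weight_footprint_gb(params: float, bytes_per_param: float) -> float:
    """Size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def max_tokens_per_second(footprint_gb: float, bandwidth_tbps: float) -> float:
    """Upper bound on tokens/s if each token streams all weights from HBM once."""
    seconds_per_token = footprint_gb / (bandwidth_tbps * 1000)
    return 1 / seconds_per_token


weights_gb = weight_footprint_gb(PARAMS, BYTES_PER_PARAM)
ceiling = max_tokens_per_second(weights_gb, HBM_BANDWIDTH_TBPS)
print(f"Weights: {weights_gb:.0f} GB of {HBM_CAPACITY_GB} GB HBM")
print(f"Bandwidth-bound ceiling: ~{ceiling:.0f} tokens/s")
```

Under these assumptions the 140 GB of weights nearly fill the H200's 141 GB, leaving almost no room for the KV cache, so a real deployment would typically spread the model across several GPUs or use lower-precision weights. The ceiling of roughly 34 tokens per second illustrates why memory bandwidth, not just capacity, limits inference speed.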

NVIDIA’s GH200 chip is suited to supercomputing and AI training

HPE will offer HPE Cray EX2500 supercomputers with the GH200 chip (Figure A) for supercomputing and AI training. HPE announced a supercomputing solution for generative AI built in part on the HPE Cray EX2500 supercomputer configuration.

Figure A

The GH200 combines the Arm-based NVIDIA Grace CPU and the NVIDIA Hopper GPU architectures, linked by NVIDIA NVLink-C2C interconnect technology. The GH200 will be packaged inside systems from Dell Technologies, Eviden, Hewlett Packard Enterprise, Lenovo, QCT and Supermicro, NVIDIA announced at Supercomputing ’23.


“Organizations are rapidly adopting generative AI to accelerate business transformations and technological breakthroughs,” said Justin Hotard, executive vice president and general manager of HPC, AI and Labs at HPE, in a blog post. “Working with NVIDIA, we’re excited to deliver a full supercomputing solution for generative AI, powered by technologies like Grace Hopper, which will make it easy for customers to accelerate large-scale AI model training and tuning at new levels of efficiency.”

What can the GH200 enable?

Projects like HPE’s show that supercomputing has applications for generative AI training, which could be used in enterprise computing. The GH200 interoperates with the NVIDIA AI Enterprise software suite for workloads such as speech, recommender systems and hyperscale inference. An enterprise could, for example, use it to run large language models trained on its own data.

NVIDIA makes new supercomputing research center partnerships

NVIDIA announced partnerships with supercomputing centers around the world. JUPITER, the scientific supercomputer at Germany’s Jülich Supercomputing Centre, will use GH200 superchips. JUPITER will be used to create AI foundation models for climate and weather research, materials science, drug discovery, industrial engineering and quantum computing for the scientific community. The Texas Advanced Computing Center’s Vista supercomputer and the University of Bristol’s upcoming Isambard-AI supercomputer will also use GH200 superchips.

A variety of cloud providers offer GH200 access

Cloud providers Lambda and Vultr offer NVIDIA GH200 in early access now. CoreWeave plans to offer NVIDIA GH200 instances starting in Q1 2024; Oracle Cloud Infrastructure also plans to offer them but did not specify a date.